Graduated from MVA

Happy to have graduated with the congratulations of the jury!

MVA stands for “Mathématiques, Vision, Apprentissage” (Mathematics, Computer Vision, Machine Learning). The master was one of the best places to learn both the theory and the applications of machine learning from excellent researchers.

One of the difficulties is choosing lectures when you would like to attend nearly all of them. I finally picked 11 to validate (the minimum is 8). Here is the list:

- Graphs in ML: Very interesting lectures. The tutorials let us test various algorithms, with applications to photos, real-time face recognition and image segmentation. Topics include spectral clustering and graph networks. I studied a paper proposing GenDICE, a generalized stationary distribution correction estimation algorithm.

- Reinforcement Learning: One of the most popular lectures of the master. It introduces the basics but also quite advanced topics in RL, complemented by a little coding.

- Topological Data Analysis: A good introduction to the field, with not-so-easy tutorials. I studied the paper “Progressive Wasserstein Barycenters of Persistence Diagrams”.

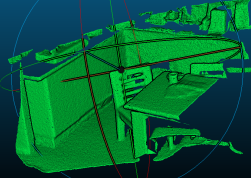

- Clouds and models: An overview of reconstruction methods from point clouds. The implementations were in Python, so they were often too slow for real applications, but they were very clear. I ran some experiments on the paper “On Fast Surface Reconstruction Methods for Large and Noisy Point Clouds”.

- Optimal transport: One of the most crowded lectures, as everyone wants to hear Gabriel Peyré! I worked on the paper “Optimal Transport to a Variety”.

- Kernel methods: One of the famous lectures of the MVA, given by Julien Mairal and Jean-Philippe Vert. It really focuses on the mathematical point of view.

- Convex Optimization: Alexandre d’Aspremont really focuses on the mathematical understanding of the methods, and on how convexity is often the boundary between easy and hard problems. Even if the content seems more basic (I didn’t wait until the last year of my master’s to use Lagrangians and the duals of linear problems), it was a rich lecture.

- Large Scale Optimization: Methods to optimize in a parallel fashion, with implementations and derivations of the equations.

- Random Matrix Theory: One of the most interesting and difficult lectures. When does spectral clustering work, and when does it not? Complex analysis provides answers, and it is beautiful.

- Prediction for Individual Sequences: Classic bandit and expert algorithms, with proofs on the blackboard.

- Convolutional Neural Networks: Lectures by Stéphane Mallat, who put my lecture notes on the course’s page.