Ph.D. Dissertation

Scalable and Performant Graph Processing on GPU using Approximate Computing

"Although this may seem a paradox, all exact science is dominated by the idea of approximation."

"It is the mark of an instructed mind to rest assured with that degree of precision that the nature of the subject admits, and not to seek exactness when only an approximation of the truth is possible. "

Abstract

A multitude of real-world problems is modeled as graphs where nodes represent entities of interest, and edges capture the relationships among them. In recent years, Graphics Processing Units (GPUs) have gained popularity as accelerators for compute-intensive data-parallel applications due to the massive data-parallelism they offer and their high memory bandwidth. Graph algorithms are inherently irregular, while GPUs are best suited for structured data-parallel computation. Scaling graph algorithms is challenging today due to the rapid growth of unstructured and semi-structured data. The prior art has targeted parallelizing popular graph algorithms on various kinds of architectures such as multi-core CPUs, many-core GPU, and distributed and heterogeneous systems. The primary technical challenge posed by graphs is due to inherent irregularity in the data access, control-flow, and communication patterns. The recent past has witnessed the emergence of very effective techniques to represent graphs compactly, tame irregular computations, and efficiently map those to the underlying hardware. However, when the graph sizes are huge (e.g., billion-scale networks), or the underlying processing is expensive, it is not practically viable to compute the exact solution in time. The focus of this thesis is on making graph processing more scalable. We look to achieve this by enhancing the performance of parallel graph algorithms on GPU by trading off computational accuracy, using approximate computing. We propose several i) algorithm- and architecture-independent, ii) algorithm-independent but architecture-specific, and iii) algorithm-specific but architecture-independent techniques for enhancing parallel graph processing efficiency using approximate computing. We present Graprox, a set of four algorithm- and architecture-independent approximate computing techniques that improve the performance of parallel graph analytics by exploiting the general structure of the graph kernel and the flow of information in graph algorithms. Graph analytics on GPU suffers from low coalesced accesses, high memory latency, and high workload imbalance. To alleviate these issues, we present Graffix, a framework with three novel graph transformation techniques that alter the graph structure to enable faster processing in exchange for small inaccuracies in the final results. Graffix’s method for improving memory coalescing creates a graph isomorph that brings relevant nodes nearby in memory and adds a controlled replica of nodes. The second technique reduces memory latency by facilitating the processing of subgraphs inside shared memory by adding edges among specific nodes and processing well-connected subgraphs iteratively inside shared-memory. The third normalizes degrees across nodes assigned to a warp to reduce thread divergence. Third, we focus on algorithm-specific but architecture-independent approximate computing techniques for improved parallel execution. Specifically, we propose ParTBC, an ensemble of techniques targeted at speeding up the computation of topk betweenness centrality vertices on GPU. The proposals restrict the computation of shortest paths from only a fraction of the vertices in parallel betweenness centrality computation, using online stopping criteria to terminate the computation faster. All the proposals provide tunable knobs to change the degree of approximation injected and control the performance-accuracy trade-off in graph applications. Further, the approximate computing techniques complement (and do not compete with) the existing optimization techniques, and could be applied on top of these optimizations to enhance the execution performance further. We illustrate the efficacy of the proposed techniques on graphs of varying characteristics and sizes and popular graph algorithms through extensive experimental evaluation. Overall, we show that approximate computation of graph algorithms is a robust way of dealing with irregularities. Approximate computing combined with parallelization promises to make heavy-weight graph computation practical, as well as, scalable. [Download thesis]

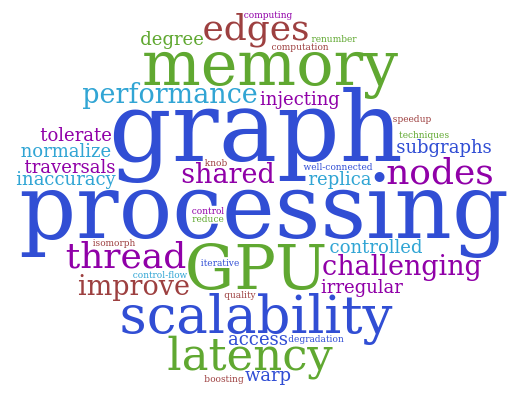

The tag-cloud provides an accurate representation of my Ph.D. research focus.