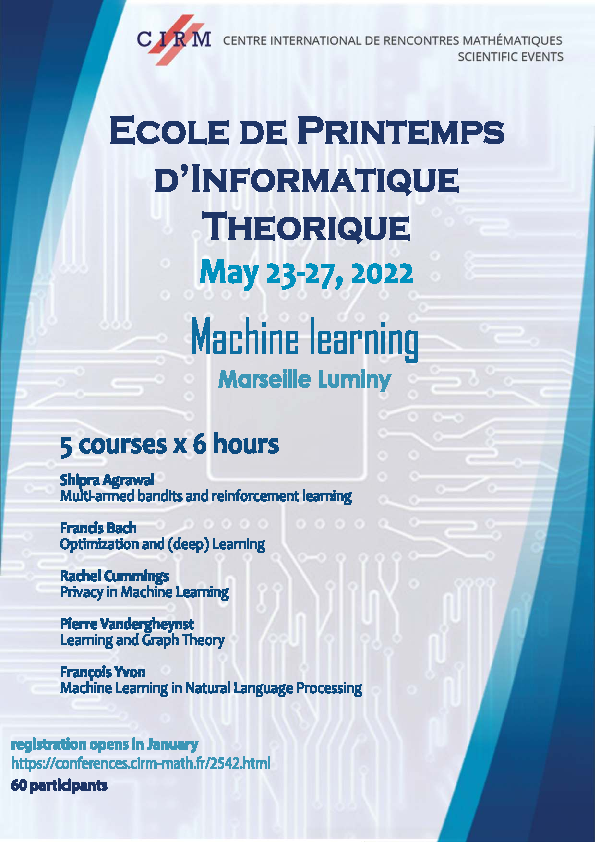

Theoretical Computer Science Spring School:

Machine Learning

École de Printemps d'Informatique Théorique : Apprentissage Automatique (EPIT), May 2022

Lecture notes of the courses, by all participants.

The above videos contain the first hour of each course. They were produced by Jean Petit, from CIRM. More information is available on the Audiovisual Mathematics Library page of the CIRM.

Description

At the heart of "artificial intelligence", Machine Learning is a very active, interdisciplinary research field involving various concepts of theoretical computer science and mathematics. The 2022 EPIT on Machine Learning aims to present modern and promising aspects of Machine Learning to interested, but possibly non-specialist, young researchers. This includes in particular deep neural networks, a class of algorithms that has achieved unexpected and spectacular empirical successes which still need to be understood from a theoretical point of view; this requires statistics, approximation theory, functional analysis, statistical physics, etc. The 2022 EPIT is composed of five six-hour lectures given by international specialists. They cover a great variety of topics, from very theoretical aspects to applications and theoretical answers to societal expectations: a general introduction to Statistical Learning Theory by Shipra Agrawal, a course on Optimization and (Deep) Learning by Francis Bach, a tutorial on Learning and Graph Theory by Pierre Vandergheynst, a lecture on Privacy in Machine Learning by Rachel Cummings, and a modern presentation of Machine Learning in Natural Language Processing by François Yvon. This selection is also meant to build bridges with other topics and communities. Each participant was invited to give a short presentation of her/his research in a wide informal poster session.

Courses:

Shipra Agrawal (Columbia University) : Multi-armed bandits and reinforcement learning

In this tutorial I will discuss recent advances in the theory of multi-armed bandits and reinforcement learning, in particular the upper confidence bound (UCB) and Thompson Sampling (TS) techniques for algorithm design and analysis.
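As a rough illustration of the upper confidence bound idea mentioned in this abstract, here is a minimal sketch of the classical UCB1 rule on Bernoulli arms. It is not taken from the course material: the function name `ucb1`, the exploration bonus `sqrt(2 ln t / n)`, and the simulated arm means are all assumptions chosen for the example.

```python
import math
import random


def ucb1(arm_means, horizon, rng):
    """Simulate UCB1 on Bernoulli arms; return how often each arm was pulled.

    arm_means: hypothetical success probabilities, unknown to the learner.
    """
    n_arms = len(arm_means)
    counts = [0] * n_arms   # number of pulls per arm
    sums = [0.0] * n_arms   # cumulative reward per arm

    for t in range(1, horizon + 1):
        if t <= n_arms:
            # Pull every arm once so that each empirical mean is defined.
            arm = t - 1
        else:
            # Pick the arm with the largest optimistic index:
            # empirical mean plus an exploration bonus.
            arm = max(
                range(n_arms),
                key=lambda a: sums[a] / counts[a]
                + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward

    return counts


if __name__ == "__main__":
    pulls = ucb1([0.2, 0.8], horizon=2000, rng=random.Random(0))
    print(pulls)  # the second arm should receive most of the pulls
```

The bonus shrinks as an arm is pulled more often, so a suboptimal arm is revisited only while its index remains competitive.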

Lecture notes by J. Achddou, Barrier, Chapuis, Ganassali, Haddouche, Marthe, Saad, Tarrade and Van Assel

Agrawal, Shipra (2022). Multi-armed bandits and beyond. CIRM. Audiovisual resource. doi:10.24350/CIRM.V.19921203

URI : http://dx.doi.org/10.24350/CIRM.V.19921203

Francis Bach (INRIA Paris & ENS Paris) : Optimization and (deep) Learning

In this short course, I will present the main optimization algorithms used in machine learning together with their mathematical analysis, both in the convex case (linear models) and in the non-convex case (non-linear models).

Lecture notes by R. Achddou, Al Marjani, Brogat-Motte, Foucault, Graziani, Le Corre, Pierrot, Sentenac and Yang

Bach, Francis (2022). Optimization for machine learning. CIRM. Audiovisual resource. doi:10.24350/CIRM.V.19921303

URI : http://dx.doi.org/10.24350/CIRM.V.19921303

Rachel Cummings (Columbia University) : Privacy in Machine Learning

How can data scientists make use of potentially sensitive data, while providing rigorous privacy guarantees to the individuals who provided data? Differential privacy ensures that if a single entry in the database were to be changed, then the algorithm would still have approximately the same distribution over outputs. In this talk, we will see the definition and properties of differential privacy; survey a theoretical toolbox of differentially private algorithms that come with a strong accuracy guarantee; and discuss recent applications of differential privacy in major technology companies and government organizations.
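To make the informal definition above concrete, the following minimal sketch releases a noisy count using the Laplace mechanism, a standard differentially private technique that the abstract does not name. The function names `laplace_noise` and `private_count`, the toy database, and the choice of a counting query are assumptions made for illustration only.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise.

    The difference of two independent exponentials with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(database, predicate, epsilon, rng):
    """Release a noisy count of the rows satisfying `predicate`.

    Changing a single entry moves the true count by at most 1
    (sensitivity 1), so Laplace noise of scale 1/epsilon gives
    epsilon-differential privacy for this query.
    """
    true_count = sum(1 for row in database if predicate(row))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    ages = [23, 35, 41, 29, 52, 47, 38]  # hypothetical sensitive entries
    noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
    print(noisy)  # the true count is 3; the released value is perturbed
```

A smaller `epsilon` means more noise and stronger privacy; a larger one gives a more accurate answer with a weaker guarantee.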

Lecture notes by Ahmadipour, El Ahmad, Jose, Lachi, Lalanne, Oukfir, Nesterenko, Ogier, Siviero and Valla

Cummings, Rachel (2022). Privacy in machine learning. CIRM. Audiovisual resource. doi:10.24350/CIRM.V.19921503

URI : http://dx.doi.org/10.24350/CIRM.V.19921503

Pierre Vandergheynst (École Polytechnique Fédérale de Lausanne) : Learning and Graph Theory

There is a plethora of interesting applications that can leverage graph-structured data, from drug discovery to route planning, and it is only natural that graph Machine Learning has attracted a lot of attention lately. We will review approaches in graph representation learning, leveraging intuition from graph signal processing to design and study graph neural networks and some of their recent extensions.

Lecture notes by Bardou, Brandao, Even, Gonon, Mitarchuk, Natura, Le Q-T, Pic, Shilov and Weber

Vandergheynst, Pierre (2022). Machine learning on graphs. CIRM. Audiovisual resource. doi:10.24350/CIRM.V.19921603

URI : http://dx.doi.org/10.24350/CIRM.V.19921603

François Yvon (CNRS - Université Paris-Saclay) : Machine Learning in Natural Language Processing

This talk is a short introduction to the automatic processing of utterances in natural language, presenting the various challenges that need to be addressed to handle the difficulties of human languages.

Lecture notes by Blanke, Daoud, Duchemin, Gourru, Jhuboo, Jourdan, Lauga, Mercklé, Sandberg and Terreau
